
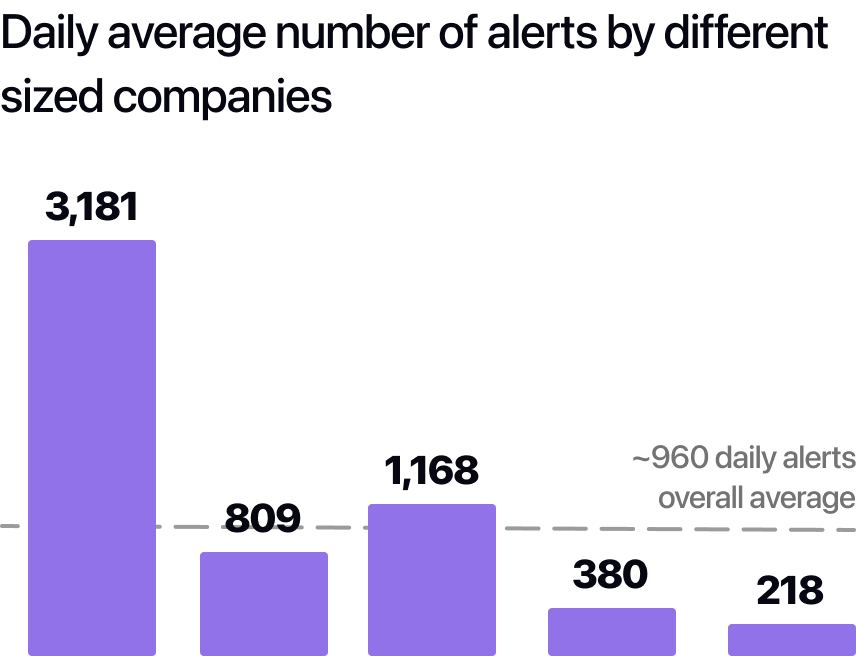

Alert fatigue is the steady erosion of analyst attention caused by a high volume of noisy or low-value alerts. It leads to missed signals, longer dwell times, and inconsistent investigations. In the latest State of AI in Security Operations survey of nearly 300 CISOs, SOC leaders, and practitioners, teams reported a median of about 960 alerts per day and said roughly 40% of alerts are never investigated.

The cost shows up in two places: higher business risk from missed or incomplete investigations, and higher turnover and weaker talent retention as low morale and analyst burnout set in.

Reducing alert fatigue is a cross-disciplinary effort. You need clean detections, reliable data, a crisp workflow, strong feedback loops, and metrics that guide decisions.

AI is already in the mix: 55% of companies use some AI for alert triage and investigation today, and security leaders expect AI to handle about 60% of SOC workloads within three years. Volume matters, but signal quality and decision speed matter more.

Capture a one-week snapshot before making changes. Things to track include:

Create ground truth by sampling alerts across sources. Label each sampled alert as a true positive, false positive, benign true positive, or duplicate, and record what context changed the decision.
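One way to keep a ground-truth sample from being dominated by the loudest source is to draw a fixed number of alerts per source. The sketch below assumes alerts are dicts with a `source` key; the function names `sample_per_source` and `label`, the label spellings, and the synthetic data are all hypothetical.

```python
import random
from collections import defaultdict

# The four labels named in the text; the snake_case spellings are our own.
LABELS = {"true_positive", "false_positive", "benign_true_positive", "duplicate"}

def sample_per_source(alerts, per_source, seed=0):
    """Draw up to `per_source` alerts from every source so low-volume sources still appear."""
    rng = random.Random(seed)  # fixed seed keeps the review sample reproducible
    by_source = defaultdict(list)
    for alert in alerts:
        by_source[alert["source"]].append(alert)
    sample = []
    for source in sorted(by_source):
        group = by_source[source]
        sample.extend(rng.sample(group, min(per_source, len(group))))
    return sample

def label(alert, verdict, deciding_context):
    """Attach a ground-truth verdict plus the context that changed the decision."""
    if verdict not in LABELS:
        raise ValueError(f"unknown label: {verdict}")
    return {**alert, "label": verdict, "deciding_context": deciding_context}

# Usage with synthetic data: 90 EDR alerts and 10 email alerts.
alerts = [{"id": i, "source": "edr" if i < 90 else "email"} for i in range(100)]
sample = sample_per_source(alerts, per_source=5)
labeled = label(sample[0], "false_positive", "asset is a known vulnerability scanner")
```

Stratifying by source is a design choice: a purely random sample of this data would contain mostly EDR alerts and could miss the email source entirely.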

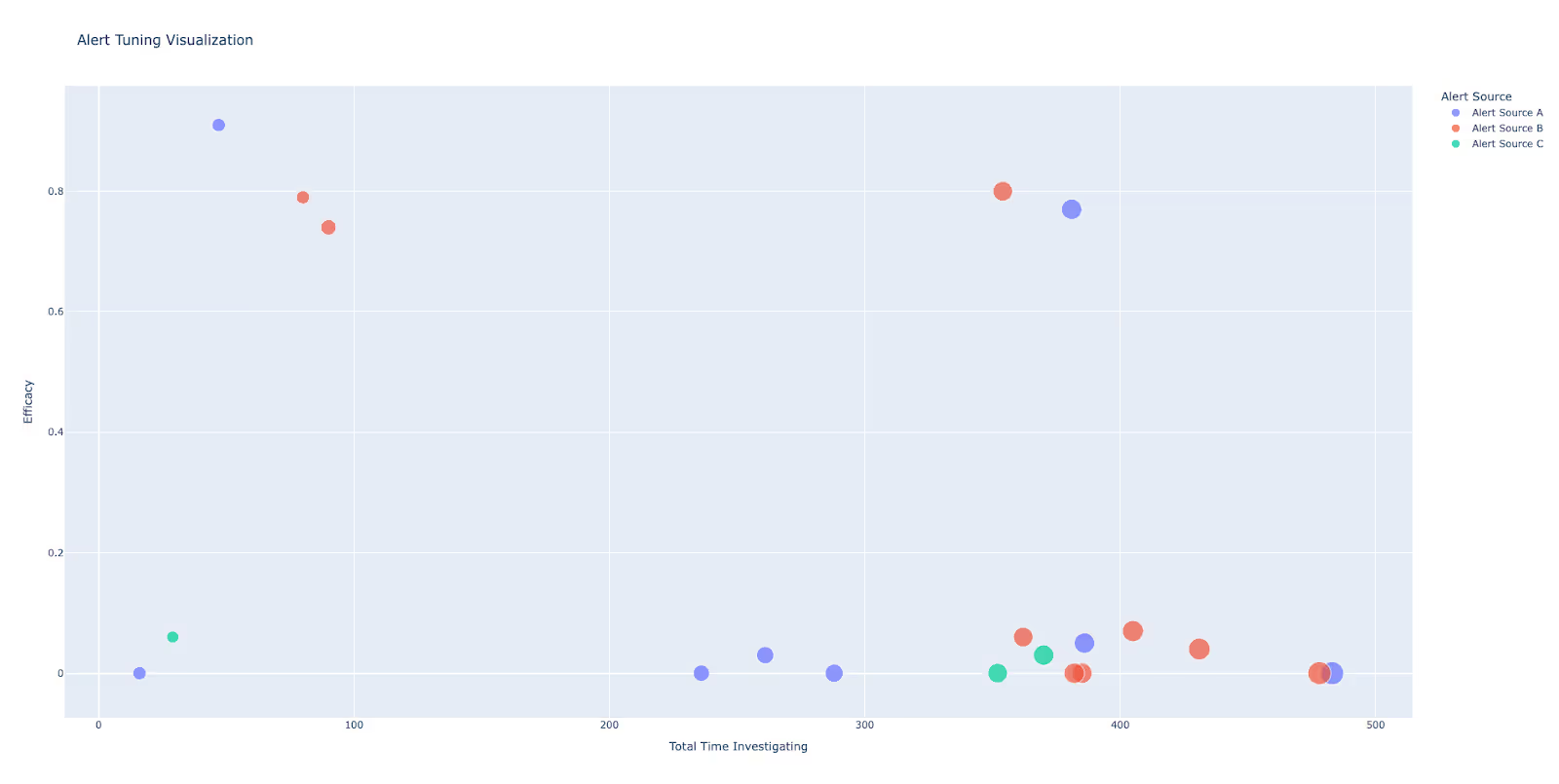

Collect a standard dataset for every high-volume alert so your tuning and fatigue work share the same lens. Capture: alert name, alert source, alert count, total time investigated, median investigation time, and efficacy. These six fields expose cognitive load and poor yield.
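The six fields can be rolled up from per-alert investigation records. This is a minimal sketch assuming a hypothetical record schema (`name`, `source`, `minutes`, `true_positive`) and assuming efficacy means the share of investigated alerts labeled true positive; adjust both to your own data.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median

@dataclass
class AlertStats:
    alert_name: str
    alert_source: str
    alert_count: int
    total_minutes: float
    median_minutes: float
    efficacy: float  # assumed definition: share of alerts labeled true positive

def summarize(records):
    """Roll per-alert records up into the six-field dataset, largest time sink first."""
    groups = defaultdict(list)
    for r in records:
        groups[(r["name"], r["source"])].append(r)
    stats = []
    for (name, source), rows in groups.items():
        minutes = [r["minutes"] for r in rows]
        true_positives = sum(1 for r in rows if r["true_positive"])
        stats.append(AlertStats(name, source, len(rows), sum(minutes),
                                median(minutes), true_positives / len(rows)))
    return sorted(stats, key=lambda s: s.total_minutes, reverse=True)

# Usage with synthetic records.
records = [
    {"name": "Impossible travel", "source": "idp", "minutes": 10, "true_positive": False},
    {"name": "Impossible travel", "source": "idp", "minutes": 20, "true_positive": True},
    {"name": "Impossible travel", "source": "idp", "minutes": 30, "true_positive": False},
    {"name": "Macro document", "source": "email", "minutes": 5, "true_positive": True},
]
stats = summarize(records)
```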

Prioritize with a simple chart: plot efficacy on the Y axis, total time investigated on the X axis, and size points by alert count. See the example figure. The lower-right quadrant (low efficacy, high time spent) holds the most impactful false positive tuning targets. Items on the left, where little total time is spent, often suit light automation.
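The quadrant logic can also be computed directly, without drawing the chart. This sketch splits each axis at its median, which is an assumption (a fixed efficacy floor or time budget works just as well); `Point`, `quadrant`, and `tuning_targets` are hypothetical names, and `alert_count` is carried only to size the bubbles if you do plot them.

```python
from collections import namedtuple
from statistics import median

# One bubble on the chart: Y = efficacy, X = total_minutes, size = alert_count.
Point = namedtuple("Point", "name efficacy total_minutes alert_count")

def quadrant(p, efficacy_cut, time_cut):
    vertical = "lower" if p.efficacy < efficacy_cut else "upper"
    horizontal = "right" if p.total_minutes > time_cut else "left"
    return f"{vertical}-{horizontal}"

def tuning_targets(points):
    """Lower-right points (low efficacy, high time), biggest time sinks first."""
    efficacy_cut = median(p.efficacy for p in points)  # median split is an assumption
    time_cut = median(p.total_minutes for p in points)
    targets = [p for p in points if quadrant(p, efficacy_cut, time_cut) == "lower-right"]
    return sorted(targets, key=lambda p: p.total_minutes, reverse=True)

# Usage with synthetic points.
points = [
    Point("A", 0.05, 900, 300), Point("B", 0.60, 800, 40), Point("C", 0.02, 40, 10),
    Point("D", 0.70, 30, 5), Point("E", 0.10, 500, 120), Point("F", 0.03, 700, 200),
]
targets = tuning_targets(points)
```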

Set target thresholds tied to business risk. Example: a maximum of 30 minutes to first decision for identity alerts on privileged accounts.
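A threshold like this is easy to check mechanically. The sketch below assumes each alert records `category`, `created_at`, and an optional `first_decision_at`; the category key `identity_privileged` and the function name are hypothetical. Alerts still waiting for a decision are measured up to the current time, so they can breach before anyone touches them.

```python
from datetime import datetime, timedelta

# Hypothetical policy table: alert category -> maximum time to first decision.
DECISION_TARGETS = {"identity_privileged": timedelta(minutes=30)}

def decision_breaches(alerts, now, targets=DECISION_TARGETS):
    """Return ids of alerts whose time to first decision exceeds the category target."""
    breached = []
    for alert in alerts:
        limit = targets.get(alert["category"])
        if limit is None:
            continue  # no target defined for this category
        decided_at = alert.get("first_decision_at") or now  # open alerts are measured up to now
        if decided_at - alert["created_at"] > limit:
            breached.append(alert["id"])
    return breached

# Usage with synthetic alerts.
t0 = datetime(2024, 1, 1, 9, 0)
alerts = [
    {"id": "id1", "category": "identity_privileged", "created_at": t0,
     "first_decision_at": t0 + timedelta(minutes=20)},
    {"id": "id2", "category": "identity_privileged", "created_at": t0,
     "first_decision_at": t0 + timedelta(minutes=45)},
    {"id": "id3", "category": "identity_privileged", "created_at": t0},
    {"id": "id4", "category": "email", "created_at": t0,
     "first_decision_at": t0 + timedelta(minutes=120)},
]
late = decision_breaches(alerts, now=t0 + timedelta(minutes=31))
```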

Apply detection hygiene. Retire stale rules, consolidate duplicates, normalize severities, and right-size thresholds. Map rules to MITRE ATT&CK to clarify intent and coverage.

Suppress and deduplicate correctly. Use time window correlation, asset-level and user-level aggregation, and guardrails that stop noisy detectors from firing repeatedly when a signal keeps toggling (flapping) between “bad” and “normal.”
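Both techniques fit in a few lines. This sketch assumes alerts carry `rule`, `asset`, `user`, `time`, and `id` fields; `deduplicate` and `FlapGuard` are hypothetical names, and the window length and flip limit are values you would tune per detector.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

def deduplicate(alerts, window):
    """Group alerts sharing (rule, asset, user) that land within `window` of the group's first alert."""
    groups, open_groups = [], {}
    for a in sorted(alerts, key=lambda a: a["time"]):
        key = (a["rule"], a["asset"], a["user"])  # asset- and user-level aggregation key
        group = open_groups.get(key)
        if group is not None and a["time"] - group["first_seen"] <= window:
            group["ids"].append(a["id"])  # duplicate: fold into the existing group
        else:
            group = {"key": key, "first_seen": a["time"], "ids": [a["id"]]}
            open_groups[key] = group
            groups.append(group)
    return groups

class FlapGuard:
    """Suppress a detector whose signal flips between "bad" and "normal" too often."""

    def __init__(self, window, max_flips):
        self.window = window
        self.max_flips = max_flips
        self.flips = defaultdict(deque)  # key -> timestamps of recent state changes
        self.last_state = {}

    def should_fire(self, key, state, now):
        previous = self.last_state.get(key)
        self.last_state[key] = state
        flips = self.flips[key]
        if previous is not None and previous != state:
            flips.append(now)
        while flips and now - flips[0] > self.window:
            flips.popleft()  # forget flips that fell out of the window
        # Fire only on a fresh transition into "bad" while the signal is not flapping.
        return state == "bad" and previous != "bad" and len(flips) < self.max_flips

# Usage with synthetic data.
t0 = datetime(2024, 1, 1, 9, 0)
alerts = [
    {"id": "a1", "rule": "r1", "asset": "h1", "user": "u1", "time": t0},
    {"id": "a2", "rule": "r1", "asset": "h1", "user": "u1", "time": t0 + timedelta(minutes=2)},
    {"id": "a3", "rule": "r1", "asset": "h1", "user": "u1", "time": t0 + timedelta(minutes=20)},
    {"id": "a4", "rule": "r1", "asset": "h2", "user": "u1", "time": t0 + timedelta(minutes=1)},
]
groups = deduplicate(alerts, timedelta(minutes=10))
guard = FlapGuard(timedelta(minutes=10), max_flips=3)
states = ["bad", "normal", "bad", "normal", "bad"]
fired = [guard.should_fire("h1:cpu", s, t0 + timedelta(minutes=i)) for i, s in enumerate(states)]
```

In this run the fifth “bad” reading is suppressed because the signal has already flipped four times inside the ten-minute window.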

Tune vendor controls with intention. Adjust EDR sensitivity by asset class, refine email security dispositions with sandbox thresholds, and calibrate identity anomaly limits to real user behavior. Document each change and the metric you expect to move.

Before disabling or tuning, ask the same three second-order questions:

Disable if the answer is no across the board. Otherwise tune and review in the next cycle.

Add a six-month audit to catch vendor logic shifts or rule decay, then decide whether to re-enable or re-tune.

Pull enrichment that changes decisions: asset criticality, data sensitivity, external exposure, identity risk, known bad indicators, exploitability, and recent change events.

Use a transparent scoring model that anyone can recalculate:

`risk_score = base_severity + asset_criticality + identity_risk + exploitability + external_exposure + recent_change_flag`

Here `recent_change_flag` boosts risk if something just changed on the impacted asset or identity.

Normalize to a 0 to 100 scale and route by policy. Scores 80 to 100 go to senior analysts. Scores 50 to 79 go to standard triage.

Scores below 50 are candidates for auto closure after guardrail checks. Only close an alert automatically when enrichment confirms a known low risk pattern and you record an audit trail.
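The formula and routing bands translate directly into code. The scales here are assumptions, not from the text: each of the five factors is scored 0 to 10, a recent change adds a fixed boost of 10, and the raw sum is divided by its maximum to land on 0 to 100. Low scores that fail the guardrail are sent to standard triage, which is also an assumption; `risk_score` and `route` are hypothetical names.

```python
# Assumed scales (not from the text): factors are 0-10 and a recent change adds a fixed boost.
CHANGE_BOOST = 10
MAX_RAW = 5 * 10 + CHANGE_BOOST

def risk_score(base_severity, asset_criticality, identity_risk,
               exploitability, external_exposure, recent_change):
    """Sum the transparent components, then normalize to 0-100."""
    components = [base_severity, asset_criticality, identity_risk,
                  exploitability, external_exposure]
    if any(not 0 <= c <= 10 for c in components):
        raise ValueError("each component must be between 0 and 10")
    raw = sum(components) + (CHANGE_BOOST if recent_change else 0)
    return round(100 * raw / MAX_RAW)

def route(alert_id, score, known_low_risk_pattern, audit_log):
    """Route by the policy bands; auto-close only behind a guardrail, with an audit record."""
    if score >= 80:
        return "senior_analyst"
    if score >= 50:
        return "standard_triage"
    if known_low_risk_pattern:  # enrichment confirmed a known low-risk pattern
        audit_log.append({"alert_id": alert_id, "action": "auto_closed", "score": score})
        return "auto_closed"
    return "standard_triage"  # guardrail not met: a human still reviews it

# Usage with synthetic inputs.
audit_log = []
high = risk_score(8, 9, 8, 7, 6, recent_change=True)
low = risk_score(5, 5, 5, 5, 5, recent_change=False)
```

Because every term is a plain integer, an analyst can recompute any score by hand, which is the point of a transparent model.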

Make the first triage view decision ready. Show a compact evidence package with who, what, when, where, why it matters, and the next action. Link raw evidence and give a one line summary.
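As a sketch, the evidence package can be a small record whose one-line summary is generated rather than hand-written. The field names mirror the text; the class name, the summary layout, and the placeholder URL are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime

@dataclass
class EvidencePackage:
    """Decision-ready triage view: who, what, when, where, why it matters, next action."""
    who: str
    what: str
    when: datetime
    where: str
    why_it_matters: str
    next_action: str
    raw_evidence_links: list = field(default_factory=list)  # links to raw evidence, not copies

    def one_line_summary(self):
        return (f"{self.when:%Y-%m-%d %H:%M} | {self.who} | {self.what} on {self.where} | "
                f"{self.why_it_matters} | next: {self.next_action}")

# Usage with synthetic values; the URL is a placeholder.
pkg = EvidencePackage(
    who="jdoe (domain admin)",
    what="new MFA device registered",
    when=datetime(2024, 5, 1, 14, 2),
    where="corporate IdP",
    why_it_matters="privileged account",
    next_action="confirm with user out of band",
    raw_evidence_links=["https://siem.example.internal/events/123"],
)
```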

Define standard intake states: new, in triage, in investigation, waiting on data, resolved. Publish SLOs for time in triage and time in investigation.
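The intake states behave like a small state machine, which makes time-in-state and SLO checks straightforward. The allowed transitions and the SLO values below are assumptions for illustration (the text names the states but not the transitions or targets); `Case` is a hypothetical class.

```python
from datetime import datetime, timedelta

# Assumed transitions between the five intake states named in the text.
TRANSITIONS = {
    "new": {"in_triage"},
    "in_triage": {"in_investigation", "resolved"},
    "in_investigation": {"waiting_on_data", "resolved"},
    "waiting_on_data": {"in_investigation"},
    "resolved": set(),
}
SLOS = {"in_triage": timedelta(minutes=15), "in_investigation": timedelta(hours=4)}  # hypothetical

class Case:
    def __init__(self, opened_at):
        self.history = [("new", opened_at)]  # (state, entered_at) pairs

    @property
    def state(self):
        return self.history[-1][0]

    def move(self, new_state, at):
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.history.append((new_state, at))

    def time_in(self, state, now):
        """Total time spent in `state`; the current state is measured up to `now`."""
        total = timedelta()
        ends = [entered for _, entered in self.history[1:]] + [now]
        for (s, entered), left in zip(self.history, ends):
            if s == state:
                total += left - entered
        return total

    def slo_breaches(self, now):
        return [s for s, limit in SLOS.items() if self.time_in(s, now) > limit]

# Usage with synthetic timestamps.
t0 = datetime(2024, 1, 1, 9, 0)
case = Case(t0)
case.move("in_triage", t0 + timedelta(minutes=5))
case.move("in_investigation", t0 + timedelta(minutes=25))
case.move("resolved", t0 + timedelta(hours=2))
```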

Require consistent notes. A good case record includes a decision, the rationale, evidence references, and the follow-up action. Short, consistent notes beat long, inconsistent narratives.

Give analysts a simple template to propose rule changes: rule name, problem, proposed change, expected impact.
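If proposals are collected in a tool rather than free text, the four template fields can be enforced at submission. This is a sketch; the class name, the validation rule (every field non-blank), and the summary format are hypothetical.

```python
from dataclasses import dataclass, fields

@dataclass(frozen=True)
class RuleChangeProposal:
    """The four fields from the template; every one is required."""
    rule_name: str
    problem: str
    proposed_change: str
    expected_impact: str

    def __post_init__(self):
        missing = [f.name for f in fields(self) if not getattr(self, f.name).strip()]
        if missing:
            raise ValueError(f"missing fields: {', '.join(missing)}")

    def one_line(self):
        return (f"[{self.rule_name}] problem: {self.problem}; change: {self.proposed_change}; "
                f"expected impact: {self.expected_impact}")

# Usage with a synthetic proposal.
proposal = RuleChangeProposal(
    rule_name="Impossible travel",
    problem="fires on corporate VPN egress",
    proposed_change="exclude VPN egress IPs",
    expected_impact="fewer false positives for remote staff",
)
```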

Run weekly proposal reviews and a monthly outcome review. Add a quarterly comprehensive tuning cycle. Keep a rollback plan for every change.

Track the effect of each change. Measure false positive rate shifts, dwell time, and coverage against mapped ATT&CK techniques. Publish a monthly summary.
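A before-and-after comparison of false positive rate and volume is the simplest effect measure. This sketch covers only those two; dwell time and ATT&CK coverage need data the example does not model. The label strings and function names are hypothetical.

```python
def fp_rate(labels):
    """Share of labeled alerts that were false positives (0.0 for an empty window)."""
    if not labels:
        return 0.0
    return sum(1 for label in labels if label == "false_positive") / len(labels)

def change_effect(before, after):
    """Compare labeled alerts from equal-length windows before and after a rule change."""
    return {
        "fp_rate_before": fp_rate(before),
        "fp_rate_after": fp_rate(after),
        "fp_rate_change": fp_rate(after) - fp_rate(before),
        "volume_before": len(before),
        "volume_after": len(after),
    }

# Usage with synthetic labels.
before = ["false_positive"] * 8 + ["true_positive"] * 2
after = ["false_positive"] * 3 + ["true_positive"] * 2
effect = change_effect(before, after)
```

Comparing windows of equal length keeps the volume numbers meaningful; a drop in true positive count after tuning is worth a regression check even when the false positive rate improves.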

Create governance that scales decisions. At acceptance time for any detection, check three gates:

Log every decision in a standing doc. If you disable a rule, move it to informational severity for auditability.

Automate enrichment, correlation, and safe closures. Fetch context, attach evidence, link related alerts, and tag duplicates.

Use AI to summarize evidence, propose testable hypotheses, and pivot across tools. Require transparency. Show data sources, list reasoning steps, and include confidence.

Baseline captured with the six-field dataset. Bubble chart produced. Top ten lower-right-quadrant alerts tuned or retired using the three second-order questions. Enrichment checklist defined. Queue states and SLOs visible.

Risk scoring and routing live. Auto closure policies in production with audit. Weekly proposal review and monthly outcomes review in place. Governance doc live with decision logs.

Quarterly comprehensive tuning executed. Metric review against targets. Regression checks on tuned rules. Short runbook published for continuous tuning and review.

Prophet Security’s Agentic AI SOC Platform lowers alert fatigue by automating evidence gathering, correlation, and safe closures with an audit trail. Prophet AI enriches every alert with asset, identity, and exposure context to determine the severity of each alert. The platform explains its reasoning and links source data so teams can validate decisions and meet audit needs. Teams use it to lower false positives, cut dwell time, and increase investigation throughput by 10x without adding headcount. Request a demo of Prophet AI to see it in action.